Data Analytics: Part 1 – Data Integrity

Share

Introduction

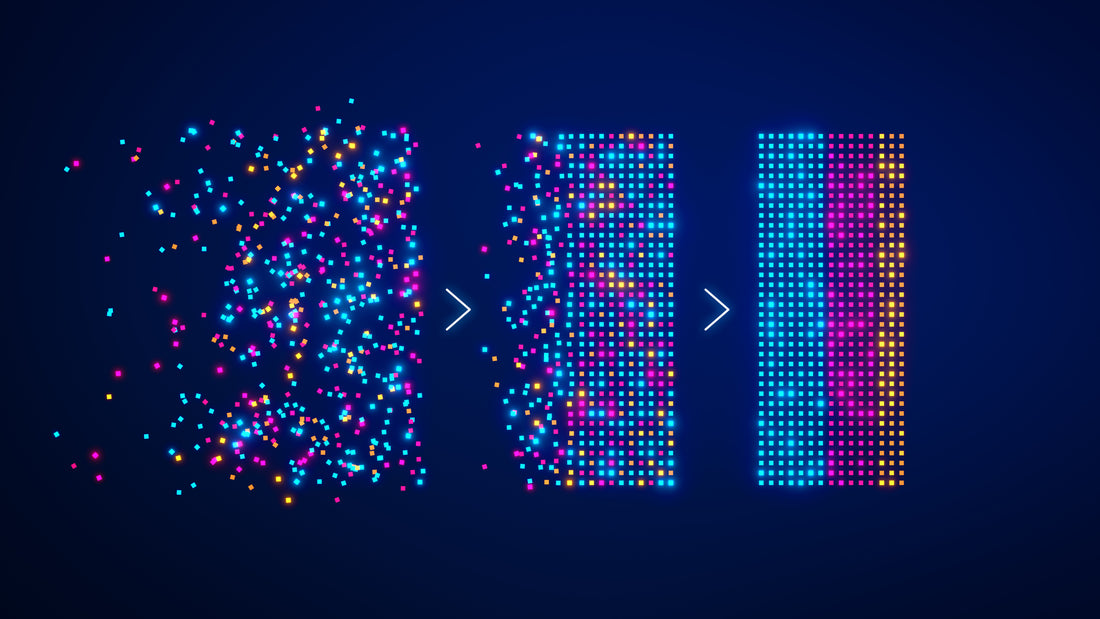

Data Analytics is (according to Investopedia): “the science of analyzing raw data to make conclusions about that information”. I understand why they use the word ‘science’, but for people like me there is also an ‘art’. I believe this because many times I find interesting relationships in the data when viewed like a mural, it is in the patterns.

When I am asked to look at data, most often it is to either look for problems (data integrity issues), run regression or to summarize based on categories. These are requests to ‘answer the question’, which has been the first step in looking through the data. However, the next step for me is always to look for patterns.

- Answer the Question: There is a given question to be answered by the data. This is the typical approach where you know what data you need to provide the answer to a question.

- Look for Patterns: Typically occurs while conducting other analysis, this is where a larger view of the data reveals hidden gems of information (correlations that were not known)

This blog is the start of a series of blogs I will produce once a month. This series will include the following regarding Data Analysis:

- Data Integrity

- Set Up the Model

- The Art

- Reporting

My approach is based on my experience, what is yours? I write these blogs in hopes that it may help others. I prefer to blog what I have learned from experience, not just a rewrite of what I found on the internet. I also prefer collaboration over critique, so please comment if you have something constructive to add.

Data Integrity

"Garbage in…" What to look for:

- Duplicates: Understand what key data can have duplicates. If a series of data is not supposed to have duplicate key values then either 1) need to evaluate the links of the data tables to summarize with the key data as a group, or 2) summarize as a group in the analysis tool. This issue happens more often than I expect.

- Sample Check: Verify records you know well or believe are at risk of being incorrect. These are records that typically fail or are more complicated. Over time these become ‘known’ to you and will become your ‘go to’ for data checks.

- Outliers: Look for anomalies. Ways to do this can be as simple as filtering the data at a particular column, then looking at the other data to see where the outliers are. This can be done through charting, or other visualization methods. Sometimes it is a simple scrolling through the data.

-

Record Count: If the system has 26,178 records with filters to match the data pull, then there better be 26,178 records in the data pull. Research any anomalies that would cause the record count not to match. Can include:

- pulling the wrong table. Sometimes the data we need is in more than one table, but let’s be sure to capture from the representative table.

- export filters are not correct. Things like date range, if not selecting based on the correct field or incorrect range.

- link to table is restricting results (possible inner link issue). This is where one table with all records is inner linked to another table that has a portion of the key data, then the output would restrict to only show the common key data. If you want only the restricted data, then not an issue, perhaps only linking to the larger data for other information. But if you need the larger data, then an outer link may be best.

- link to another table may be causing duplicates. The data has a link with a 1 to many relationship that causes records to duplicate (see Duplicates issue). In those cases, if you don’t want duplicates, then you need to group the data and summarize.

- output restrictions can be built into the exporting system. You may need to adjust the export limits, or export filter in parts that can be combined or union for a total count to match.

- Totals: Aside from record count, if the totals don’t match the system, then further review that you are pulling the correct field, otherwise it can be in the UOM (unit of measure) or other conversions like currency or how something is packed/inventoried/shipped. Think through ‘what can affect the data result in the system’.

- Data Source: Where is the data coming from? For each field, what is the source:

- Is the data coming from the ‘master’ source or is it a ‘slave’ to the source? Any time you can pull from the master source, best to do so, unless you are testing another database for integrity. Taking from a master source reduces the risk of invalid data.

- Is the data a ‘key’ field, that is enforced in the source system? This helps give credibility to the information, based on a set systematic standard.

If you have other methods for reviewing data integrity, please share.

About the Author:

Travis King first worked with data in the defense industry starting in 1996. Becoming an Excel expert, he was invited into projects working through data. Large data projects required using other databases, further expanding the size of data for review. He has led projects in forecasting financials, running market data, massive software migrations to SAP, and conducting data integrity audits. Travis worked in beverage distribution, defense, automotive, software and recreation industries.

Contact Us to learn more about this topic!